I had a 16-node Azure HCI 23H2 cluster that refused to get past the initial update. After working through several undocumented resolutions, I decided to put together a series of posts with the solutions!

Microsoft has good documentation covering all the requirements for HCI clusters, be it Azure Stack or Windows Storage Spaces Direct (S2D) clustering, so I will not go into that here. However, I came across the following challenges even though everything was properly configured.

The physical design in question is exactly as described in the Microsoft documentation at: Azure Local baseline reference architecture – Azure Architecture Center | Microsoft Learn

16 Node clusters based on 23H2, with 100Gb NICs and fabric

Nodes randomly dropping out of cluster

Nodes were unstable: disks went offline, nodes went offline, and there was a lot of random behavior that kept the initial update from succeeding.

When looking at the Security Event log of the nodes, event ID 5152 and other 51xx events were being logged constantly on all nodes of the cluster:

Looking at this log entry, I could see that UDP (protocol 17) traffic on port 3343 to and from the nodes, on the cluster SMB VLANs 711 and 712, was being blocked by “WSH Default” (Windows Service Hardening). To me, that explained why disks were going offline and, as a result, nodes were going offline.
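If you want to check for the same symptom on your own nodes, here is a minimal sketch for pulling those events with PowerShell. It assumes an elevated session on a node; the sample size of 20 and the simple text match on the port number are my own choices for illustration, not part of the original troubleshooting.

```powershell
# Pull recent "packet blocked" events (ID 5152) from the Security log.
# Must be run elevated. -MaxEvents 20 is an arbitrary sample size.
Get-WinEvent -FilterHashtable @{ LogName = 'Security'; Id = 5152 } -MaxEvents 20 |
    # Crude text filter for the cluster port; it may also match other
    # numbers in the message that happen to contain "3343".
    Where-Object { $_.Message -match '3343' } |
    Select-Object TimeCreated, Id, Message |
    Format-List
```

The message body of each event is where the application name, process ID, protocol, port and filter name show up.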

I manually created Inbound and Outbound rules to allow that traffic; however, the rules were ignored by all systems.
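For reference, allow rules of that kind can be sketched as follows. This is an assumption about what such rules might look like, not the exact rules I created; the display names are hypothetical. Keep in mind that in this case rules like these did not fix the problem.

```powershell
# Hypothetical display names; scope the rules further (profiles, addresses)
# to suit your environment.
New-NetFirewallRule -DisplayName 'Cluster UDP 3343 (In)' `
    -Direction Inbound -Protocol UDP -LocalPort 3343 -Action Allow

New-NetFirewallRule -DisplayName 'Cluster UDP 3343 (Out)' `
    -Direction Outbound -Protocol UDP -RemotePort 3343 -Action Allow
```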

Most of these blog articles describe fixes I came up with, and I wish I could claim this one. In this case, though, I have to give credit where credit is due: it was the Microsoft engineer Wai Kong who had me try something that was a huge learning experience for me. It was so ‘out there’ that I needed to learn how the solution was found, which I will explain at the end.

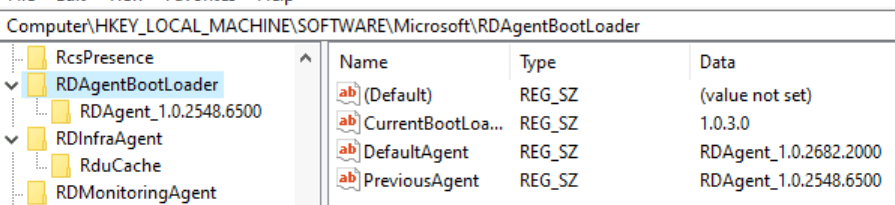

We checked the NICs in question using Get-NetAdapter, as shown in the following figure:

Next, we checked the full settings of the vSMB NIC with the Get-NetIPInterface command:
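A rough sketch of those two checks is below. The 'vSMB*' pattern is an assumption about how the storage vNICs are named; substitute whatever names Get-NetAdapter shows on your nodes. Restricting the second command to IPv4 is also my assumption.

```powershell
# List the storage vNICs. 'vSMB*' is an assumed naming pattern.
Get-NetAdapter -Name 'vSMB*' |
    Format-Table Name, InterfaceDescription, Status, LinkSpeed

# Show the IP interface settings for the same adapters, including Dhcp.
Get-NetIPInterface -InterfaceAlias 'vSMB*' -AddressFamily IPv4 |
    Format-List InterfaceAlias, InterfaceIndex, AddressFamily, Dhcp, ConnectionState
```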

Notice that the “Dhcp” setting is ‘Enabled’. Even though this vNIC has static addressing, svchost.exe was still trying to obtain and use a DHCP configuration and, as a result, bypassing the firewall allow rule. How and why is a conversation for another time. The solution here was to disable DHCP on all the nodes for the two ports in question (*Port 1 and *Port 2).

Here’s how I disabled it using the Set-NetIPInterface command:
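As a minimal sketch, the change on a single node looks something like this. The wildcard aliases follow the port names mentioned above; limiting the change to IPv4 is an assumption on my part, so check the output of the first command before applying the second.

```powershell
# Wildcards matching the two ports in question; adjust to your adapter names.
$aliases = '*Port 1', '*Port 2'

# Preview which interfaces match and what their current Dhcp state is.
Get-NetIPInterface -InterfaceAlias $aliases -AddressFamily IPv4 |
    Format-Table InterfaceAlias, Dhcp

# Disable DHCP on the matching interfaces (IPv4 only is an assumption).
Set-NetIPInterface -InterfaceAlias $aliases -AddressFamily IPv4 -Dhcp Disabled

# Confirm the change.
Get-NetIPInterface -InterfaceAlias $aliases -AddressFamily IPv4 |
    Format-Table InterfaceAlias, Dhcp
```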

Once this was run on all 16 nodes (you can run the command remotely with Enter-PSSession), the errors stopped in the System Event Logs, disks stopped going offline, and node stability was established!
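With 16 nodes, an alternative to opening a session per node is to fan the command out with Invoke-Command. This is a sketch of that alternative, not what I actually ran; it assumes PowerShell remoting is enabled, that it is run from a machine with the FailoverClusters module (such as a cluster node), and the same IPv4 assumption as before.

```powershell
# Get the node names from the cluster (requires the FailoverClusters module).
$nodes = (Get-ClusterNode).Name

Invoke-Command -ComputerName $nodes -ScriptBlock {
    $aliases = '*Port 1', '*Port 2'

    # Disable DHCP on the two ports, then report the resulting state.
    Set-NetIPInterface -InterfaceAlias $aliases -AddressFamily IPv4 -Dhcp Disabled
    Get-NetIPInterface -InterfaceAlias $aliases -AddressFamily IPv4 |
        Select-Object InterfaceAlias, Dhcp
}
```

Each returned object carries a PSComputerName property, so you can see at a glance which node still reports Dhcp as Enabled.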

How was this discovered?

The event tells you which process generated the message, and tools can then be used to check the modules (DLLs or EXEs) loaded in that process. In this case, the DHCP-related DLL in the process was calling the DHCP client service.
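I don't know exactly which tools the engineer used, but the same kind of inspection can be sketched with built-in commands. The process ID below is hypothetical; take the real one from the event. This needs an elevated session, and some protected processes may still refuse to list their modules.

```powershell
# Hypothetical process ID; use the one reported in the 5152 event.
$procId = 1234

# List modules loaded in that process and look for DHCP-related ones.
Get-Process -Id $procId -Module |
    Where-Object ModuleName -match 'dhcp' |
    Select-Object ModuleName, FileName

# For svchost.exe, show which services are hosted in that process.
tasklist /svc /fi "PID eq $procId"
```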